How to Set Up a Local AI Model with Xcode, Ollama, Qwen2.5-Coder & Alex Sidebar

I wanted to test out Alex Sidebar, a desktop client that aims to enable Cursor-like features in Xcode, to explore how well a local custom model could perform during development.

Alex Sidebar lets you set up a custom model that the editor can use for generating code. To try this out, I decided to run Alibaba’s Qwen2.5-Coder model locally. This new model, developed by Alibaba’s Qwen research team, is open source, Apache 2.0 licensed, and optimized for coding tasks.

Here’s how I went about setting it up with Ollama as the local server and ngrok to make it accessible to Alex Sidebar.

Why ngrok?

ngrok is essential here because Alex Sidebar, the client application running alongside Xcode, currently needs a public URL to reach a local model server. By default, your local server (Ollama in this case) listens on a localhost address that’s inaccessible from outside your machine. ngrok solves this by creating a secure tunnel that links your local server to a public-facing URL, so external services can connect to it. In the coming weeks, Alex Sidebar will support localhost addresses directly, but for now all of its traffic is still routed through their server, which makes the tunnel a necessity.

Steps to Set Up Ollama, Qwen2.5-Coder, and ngrok

1. Install Ollama and Pull the Model

To begin, make sure you have Ollama installed, as it will manage and serve the Qwen2.5-Coder model locally.

Download Qwen2.5-Coder:

Once Ollama is installed, pull the model in your terminal using:

ollama pull qwen2.5-coder:32b

This will download the Qwen2.5-Coder model to your machine.

2. Run Ollama Server Locally

With the model downloaded, start up the Ollama server to make it accessible on localhost:

ollama serve

Ollama will begin serving the model at http://localhost:11434, the default port.
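Before moving on, it can help to confirm the server is actually answering. The following is a minimal sketch, assuming curl is installed and Ollama is on its default address; `OLLAMA_URL` and `check_ollama` are names I made up for illustration.

```shell
# Assumed default address from the step above; override OLLAMA_URL if yours differs.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Report whether the Ollama server answers, then list the locally pulled models.
check_ollama() {
  if curl -fsS --max-time 5 "$OLLAMA_URL/api/version" >/dev/null; then
    echo "Ollama is reachable at $OLLAMA_URL"
    curl -fsS "$OLLAMA_URL/api/tags"   # JSON list of local models
  else
    echo "Ollama is not reachable at $OLLAMA_URL" >&2
    return 1
  fi
}
```

Run `check_ollama` in a second terminal while `ollama serve` is running. If the pull succeeded, qwen2.5-coder:32b should appear in the JSON it prints.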

3. Install and Configure ngrok

Next, set up ngrok to create a public URL for the Ollama server. This public URL will allow Alex Sidebar to connect to the local model server running on your Mac.

Sign Up and Authenticate ngrok:

Go to ngrok.com to create a (free) account and get an authentication token from the dashboard.

Install ngrok on your machine:

brew install ngrok

Then, authenticate ngrok with your auth token:

ngrok config add-authtoken YOUR_AUTH_TOKEN

Replace YOUR_AUTH_TOKEN with your unique token. This step ensures your ngrok tunnels are secure and linked to your account.

Optional: at this stage, if you want to test the Qwen model in interactive mode in your terminal, run:

ollama run qwen2.5-coder:32b

4. Expose the Ollama Server Using ngrok

Now that ngrok is authenticated, expose the Ollama server. Since the server is running on port 11434, run:

ngrok http 11434 --host-header="localhost:11434"

ngrok will generate a public URL, like https://randomsubdomain.ngrok-free.app, which securely tunnels traffic to http://localhost:11434. This URL is what you’ll use in the next step for configuring Alex Sidebar.
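If you would rather not copy the public URL out of the ngrok terminal UI, the ngrok agent also exposes a small local API. Here is a rough sketch, assuming the agent's inspection interface is on its default port 4040; `extract_public_url` and `ngrok_public_url` are hypothetical helper names, and the grep match is a plain-text shortcut rather than a real JSON parser.

```shell
# Pull the first https public_url out of the agent's JSON on stdin.
# This is a text match with grep, not a full JSON parser.
extract_public_url() {
  grep -o '"public_url": *"https://[^"]*"' | head -n 1 | cut -d '"' -f 4
}

# Ask the local ngrok agent for its tunnels (assumes the default port 4040).
ngrok_public_url() {
  curl -fsS --max-time 5 http://127.0.0.1:4040/api/tunnels | extract_public_url
}
```

With the tunnel running, `ngrok_public_url` should print the same https URL that ngrok shows in its own window.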

5. Configure Alex Sidebar to Use the Public URL

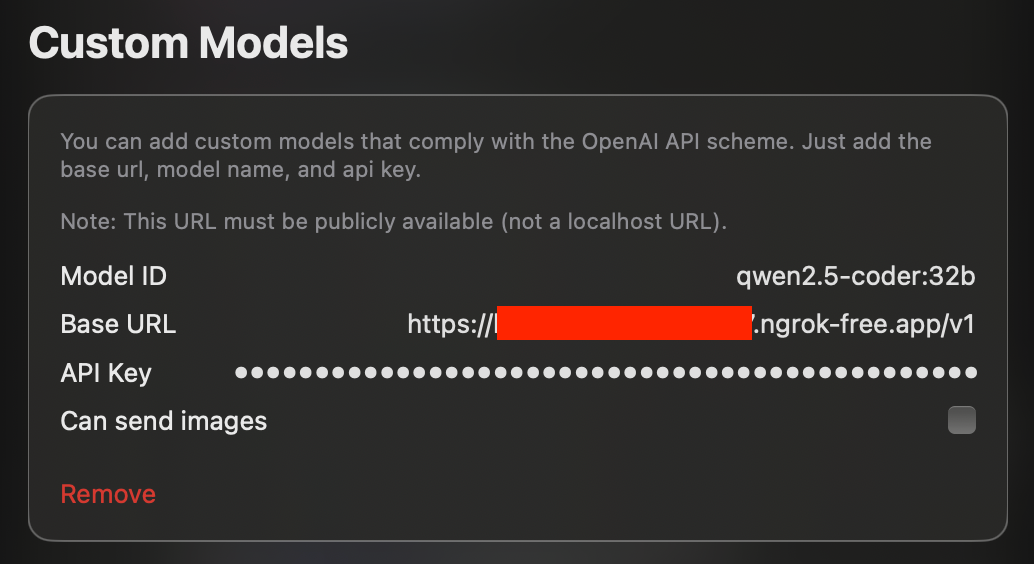

With ngrok up and running, go to Alex Sidebar settings and set up a custom model:

- Model ID: qwen2.5-coder:32b (the name of the model)

- Base URL: Paste the public URL provided by ngrok and append /v1 (e.g., https://randomsubdomain.ngrok-free.app/v1).

- API Key: Paste the authtoken provided by ngrok.

This configuration tells Alex Sidebar to route its requests to the ngrok public URL, which then tunnels back to your local Ollama server.
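The /v1 suffix points at Ollama's OpenAI-compatible API. To check that path through the tunnel before opening Alex Sidebar, something like the sketch below should work. The `NGROK_URL` value is the placeholder from above, `base_url` and `chat_once` are names I invented, and I am assuming (not confirming) that Alex Sidebar talks to the chat completions endpoint.

```shell
# Placeholder from the example above; replace with the URL ngrok printed.
NGROK_URL="https://randomsubdomain.ngrok-free.app"

# Strip one trailing slash, then append /v1 to get the Base URL value.
base_url() {
  printf '%s/v1\n' "${1%/}"
}

# Send a single chat request to Ollama's OpenAI-compatible endpoint.
chat_once() {
  curl -sS "$(base_url "$NGROK_URL")/chat/completions" \
    -H "Content-Type: application/json" \
    -d '{"model": "qwen2.5-coder:32b", "messages": [{"role": "user", "content": "Say hello in Swift"}]}'
}
```

`base_url "$NGROK_URL"` prints the string to paste into the Base URL field, and `chat_once` should return a JSON chat completion if the tunnel and model are both up.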

Using Alex Sidebar with Qwen on an M1 Mac

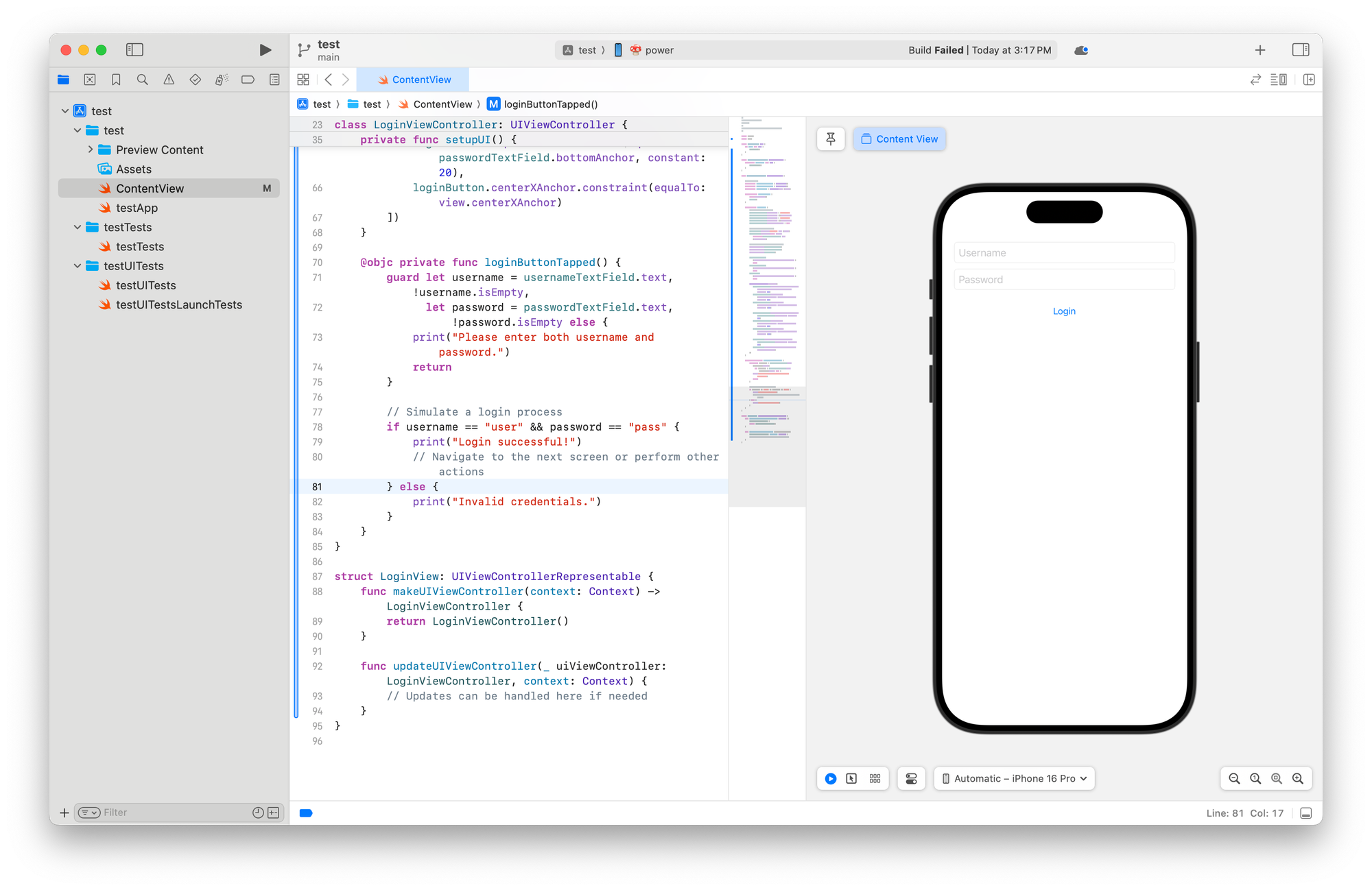

After setting up Alex Sidebar to use the Qwen model, I closed the application. Next, I launched Xcode and created a new Swift iOS app. Then, I reopened Alex Sidebar and asked it to ‘generate a simple login flow in Swift.’

Real-time code generation on an M1 Mac: "generate a simple login flow in Swift" using Qwen

After one more prompt, I integrated the LoginViewController with the SwiftUI ContentView and got it working. The local model is a bit slow, so I’ll likely stick with hosted models, but this is an incredible free option! 🤯

Thanks to Daniel Edrisian, founder of Alex Sidebar, for troubleshooting and answering a few questions via Discord!

Additional Notes on Using ngrok

Free accounts can only have one active tunnel at a time.

- To kill any running tunnels via the web you can use their Dashboard, or

- To kill tunnels via terminal run:

pkill ngrok

To validate that the Qwen model is being served via the tunnel, run the following command in a terminal. Note: replace randomsubdomain.ngrok-free.app with the public URL provided by ngrok and replace YOUR_AUTH_TOKEN with the one provided by ngrok.

curl -X POST https://randomsubdomain.ngrok-free.app/api/generate \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer YOUR_AUTH_TOKEN" \
  -d '{
    "model": "qwen2.5-coder:32b",
    "prompt": "build a little webpage"
  }'
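By default, /api/generate streams its answer as a series of JSON objects, one per line, which can be hard to read in a terminal. A variation that asks for a single JSON reply might look like the sketch below. `generate_payload` and `generate_once` are hypothetical helpers, the URL is the same placeholder as above, and the payload builder does no escaping, so keep prompts free of double quotes and backslashes.

```shell
# Placeholders; replace the URL with the one ngrok printed.
NGROK_URL="https://randomsubdomain.ngrok-free.app"
MODEL="qwen2.5-coder:32b"

# Build the request body. "stream": false asks Ollama for one JSON object.
# No escaping is done, so avoid quotes and backslashes in the prompt.
generate_payload() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}\n' "$MODEL" "$1"
}

# Send the prompt through the tunnel and print the raw JSON reply.
generate_once() {
  curl -sS -X POST "$NGROK_URL/api/generate" \
    -H "Content-Type: application/json" \
    -d "$(generate_payload "$1")"
}
```

For example, `generate_once "build a little webpage"` should print one JSON object whose response field holds the generated text.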